How to Build an Artificial Intelligence Governance Framework

Artificial Intelligence (AI) is not just something for the future; it is already shaping how we live and work. From apps that suggest your next favorite show to tools that help doctors predict illnesses, AI is everywhere. AI can accomplish amazing things, but it can also create serious problems if it is not managed carefully. Many organizations have built AI models that transformed their business, while others have struggled because governance was not part of the plan. This raises an important question every organization must answer: how do you build an artificial intelligence governance framework that keeps AI smart, safe, and trustworthy?

This article provides step-by-step guidance, supported by real-world situations and practical insights, to enhance your understanding.

AI governance is the set of rules, processes, and guidelines that help organizations use artificial intelligence in a responsible and controlled way. For an AI application developer, AI governance provides a clear framework to ensure systems are fair, reliable, secure, and aligned with business goals. It clearly defines how AI should be built, used, monitored, and improved over time.


Governance may sound like a complex corporate term, but at its core, it is about making sure AI behaves the way it is intended to. AI systems often make decisions that directly affect people, money, and reputations. Without proper governance, AI can produce biased outcomes, misuse data, or make decisions that are difficult to explain. These issues can lead to legal risks, loss of customer trust, and long-term damage to a company’s reputation.

This is why AI governance matters. Studies by McKinsey and MIT estimate that 70–85% of AI initiatives fail, mainly because of poor data quality, unclear objectives, and limited executive support.

Strong AI governance helps organizations avoid these risks while building trust and confidence in their AI systems. It ensures AI is transparent, ethical, and dependable. It also clarifies who is responsible for AI decisions, how risks are managed, and how performance is regularly reviewed.

For example, while working with a fintech startup on an AI-based loan approval system, we found in early testing that the model was rejecting qualified applicants because of hidden bias in the data. Introducing AI governance early reduced false rejections by 30% and helped build trust with both customers and regulators.
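A result like "false rejections reduced by 30%" is only meaningful if the team defines a false rejection consistently and measures it per applicant group. The sketch below is a minimal illustration, not the startup's actual method: it assumes a hypothetical record format of `(group, qualified, approved)` and computes, for each group, the share of qualified applicants the model rejected.

```python
from collections import defaultdict


def false_rejection_rates(records):
    """Share of qualified applicants who were rejected, per group.

    Each record is (group, qualified, approved); this schema is hypothetical.
    """
    qualified = defaultdict(int)
    rejected = defaultdict(int)
    for group, is_qualified, approved in records:
        if is_qualified:
            qualified[group] += 1
            if not approved:
                rejected[group] += 1
    return {g: rejected[g] / qualified[g] for g in qualified}


# Hypothetical test-set outcomes: (group, qualified, approved)
sample = [
    ("A", True, True), ("A", True, True), ("A", True, False), ("A", False, False),
    ("B", True, False), ("B", True, False), ("B", True, True), ("B", False, False),
]
rates = false_rejection_rates(sample)
for group, rate in sorted(rates.items()):
    print(f"group {group}: {rate:.0%} of qualified applicants rejected")
```

Here group B's qualified applicants are rejected twice as often as group A's, which is the kind of gap a governance review would flag before launch.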

In short, with the right governance in place, businesses can innovate with confidence knowing their AI systems are responsible, transparent, and built for long-term success.


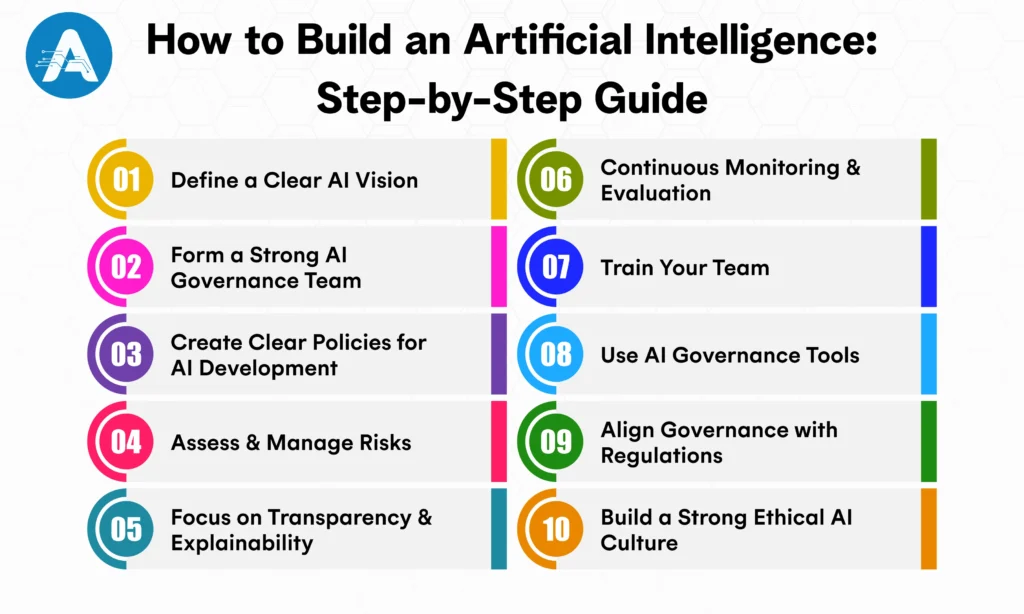

This step-by-step guide explains how to build an AI governance framework, from defining a clear vision and assembling the right team to managing risk, monitoring AI systems, and staying compliant. It helps businesses and beginners understand the process clearly and practically.

Before you build an artificial intelligence system, you need a clear purpose. Ask yourself:

For example, a healthcare company wanted to build an AI system for patient diagnosis. Their vision was clear and practical: AI should support doctors, not replace them, while ensuring safety, transparency, and fairness.

To successfully build an AI model, you need the right people guiding it. Your AI governance team should include:

Example: A fintech company built an AI model for loan approvals. By involving legal, ethics, and business teams, they reduced bias and built customer trust.

Policies are the foundation of AI governance. They define how your AI is built, deployed, and managed. Focus on:

For example, when a retail company decided to build an AI app for personalized marketing, clear policies helped prevent bias and protect customer trust. This approach made their AI building process ethical, transparent, and effective.

AI systems are not perfect, and failures can happen. Risk management helps protect the business from unexpected issues.

For example, Uber’s AI-based pricing system once caused unfair surge pricing. Strong governance and risk controls could have reduced customer backlash and reputational damage.
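One common way to make risk management concrete is a risk register that scores each risk by likelihood and impact. The sketch below assumes a 1 to 5 scale for both and hypothetical "high/medium/low" cut-offs; your own risk policy would define the real scale, thresholds, and entries.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int      # assumed scale: 1 (minor) to 5 (severe)

    @property
    def score(self) -> int:
        # Classic likelihood x impact scoring.
        return self.likelihood * self.impact

    @property
    def level(self) -> str:
        # Hypothetical thresholds; set these in your own risk policy.
        if self.score >= 15:
            return "high"
        if self.score >= 8:
            return "medium"
        return "low"


register = [
    Risk("Surge pricing triggers during an emergency", likelihood=3, impact=5),
    Risk("Training data drifts away from live traffic", likelihood=4, impact=3),
    Risk("Model endpoint outage", likelihood=2, impact=3),
]

# Review the highest-scoring risks first.
for risk in sorted(register, key=lambda r: r.score, reverse=True):
    print(f"{risk.level:>6}  {risk.score:>2}  {risk.name}")
```

Sorting by score gives reviewers a simple priority order; each "high" entry would normally get a named owner and a mitigation plan.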

AI should not work like a black box. When AI decisions are clear and understandable, trust naturally increases.

For example, in healthcare, explainable AI helps doctors understand why a patient is marked as high risk, making AI decisions more reliable and accepted.
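For a linear model such as logistic regression, an explanation can be as simple as showing how much each input adds to the score. The sketch below uses hypothetical weights and feature names for a patient risk model (they are not clinical values); it returns the predicted risk together with each feature's contribution to the log-odds, largest first.

```python
import math

# Hypothetical logistic-regression weights for illustration only.
WEIGHTS = {"age": 0.04, "systolic_bp": 0.02, "smoker": 0.9}
INTERCEPT = -6.0


def explain(patient):
    """Return the risk probability and each feature's log-odds contribution."""
    contributions = {f: w * patient[f] for f, w in WEIGHTS.items()}
    log_odds = INTERCEPT + sum(contributions.values())
    probability = 1 / (1 + math.exp(-log_odds))  # sigmoid
    return probability, contributions


prob, contrib = explain({"age": 70, "systolic_bp": 160, "smoker": 1})
print(f"risk = {prob:.2f}")
for feature, value in sorted(contrib.items(), key=lambda kv: abs(kv[1]), reverse=True):
    print(f"  {feature:<12} {value:+.2f}")
```

This breakdown is exact only for linear models. For non-linear models, teams typically turn to model-agnostic explanation methods such as SHAP or LIME.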

AI systems change over time, so governance must be ongoing.

For example, companies like Netflix continuously monitor their recommendation systems to ensure content remains relevant and fair, showing how AI can be managed responsibly.
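A widely used drift check is the Population Stability Index (PSI), which compares how a feature was distributed at training time with how it looks in production. The sketch below is a simplified version that assumes equal-width bins over the combined range (many teams use quantile bins from the baseline instead); the 0.1 and 0.25 thresholds are a commonly cited rule of thumb, not a standard.

```python
import math


def population_stability_index(expected, actual, bins=10, eps=1e-6):
    """PSI between a baseline sample and a live sample; larger means more drift."""
    lo = min(min(expected), min(actual))
    hi = max(max(expected), max(actual))
    width = (hi - lo) / bins or 1.0  # guard against a constant feature

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = min(int((v - lo) / width), bins - 1)
            counts[idx] += 1
        # eps avoids log(0) when a bin is empty in one sample.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def drift_status(psi):
    # Rule-of-thumb thresholds; tune them for your own models.
    if psi < 0.1:
        return "stable"
    if psi < 0.25:
        return "monitor"
    return "investigate"


baseline = [float(v) for v in range(100)]
psi_same = population_stability_index(baseline, baseline)
psi_shifted = population_stability_index(baseline, [v + 30 for v in baseline])
print(drift_status(psi_same), drift_status(psi_shifted))
```

Running a check like this on a schedule, per feature and per model output, turns "monitor continuously" into an alert a named owner can act on.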

AI governance works best when employees understand how AI systems function and make decisions.

Training should focus on:

For example, organizations that invest in AI training often see better adoption and fewer mistakes because employees become active contributors to responsible AI use.

Technology plays an important role in managing AI governance.

For example, AI governance tools from leading technology providers help make building artificial intelligence safer, clearer, and more controlled.

AI regulations are evolving quickly, and businesses must stay prepared.

For example, when governance and compliance work together, organizations can avoid legal risks and move forward with confidence.

AI governance goes beyond policies and processes. It is also about building the right mindset across the organization.

Encourage teams to think critically and ask simple but important questions:

For example, a strong ethical culture supports technical governance and helps build long-term trust in AI systems.

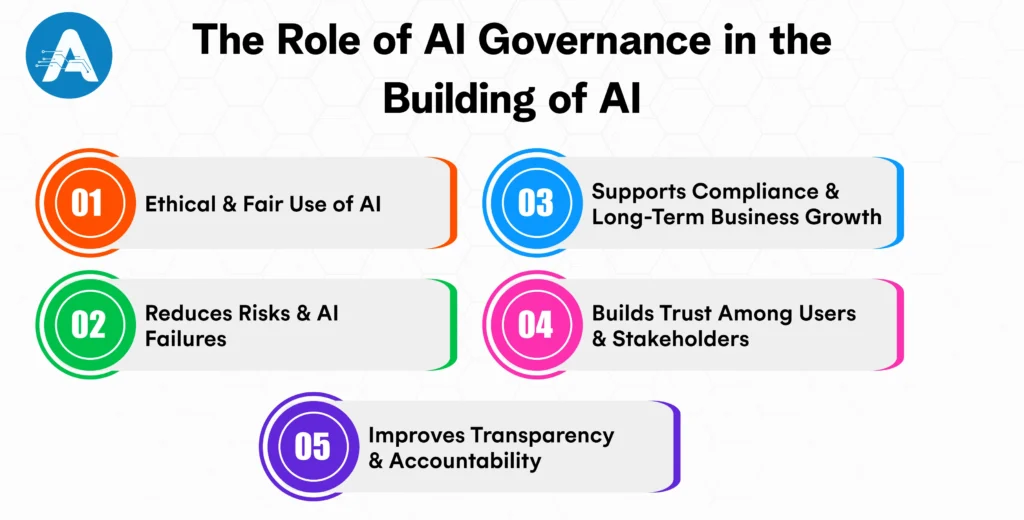

For an AI development company, AI governance guides the building of AI by setting clear rules for responsible and ethical development. It helps reduce risks, improve transparency, and ensure AI systems are trustworthy.

An AI governance framework helps ensure that AI systems treat everyone fairly. It reduces the risk of bias, discrimination, and unfair outcomes by establishing clear rules for data use, model design, and decision-making. This is especially important in areas like hiring, lending, healthcare, and customer service, where AI decisions can directly affect people’s lives.
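A simple fairness test used in hiring and lending reviews is the disparate impact ratio: the lowest group's selection rate divided by the highest group's. The US "four-fifths rule" treats a ratio below 0.8 as a signal of possible adverse impact. The sketch below uses hypothetical group names and decisions; it is a screening check, not a complete fairness assessment.

```python
def selection_rates(outcomes):
    """outcomes maps group -> list of 0/1 decisions (1 = selected or approved)."""
    return {g: sum(d) / len(d) for g, d in outcomes.items() if d}


def disparate_impact_ratio(outcomes):
    rates = selection_rates(outcomes)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so there is no gap between groups
    return min(rates.values()) / highest


# Hypothetical hiring-screen decisions.
decisions = {
    "group_a": [1, 1, 1, 0, 1, 0, 1, 1, 0, 1],  # 7 of 10 selected
    "group_b": [1, 0, 0, 1, 0, 1, 0, 0, 1, 0],  # 4 of 10 selected
}
ratio = disparate_impact_ratio(decisions)
print(f"disparate impact ratio = {ratio:.2f}")
if ratio < 0.8:
    print("Below the four-fifths guideline: flag for review")
```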

AI governance helps identify and manage risks such as incorrect predictions, system failures, data misuse, or security issues. By implementing controls, reviews, and monitoring, organizations can identify problems early and prevent costly mistakes that could impact operations or brand image.

AI regulations are increasing worldwide. An AI governance framework helps organizations stay compliant with current and future laws while aligning AI initiatives with business goals and values. This allows companies to innovate safely, scale AI faster, and gain a long-term competitive advantage.

Trust is critical for AI adoption. When AI systems are governed properly, customers, employees, and partners feel more confident using them. Governance ensures transparency, clear ownership, and responsible use of AI, which strengthens trust and protects the organization’s reputation.

With a governance framework, AI decisions are easier to understand and explain. It clearly defines who is responsible for AI outcomes and how decisions are made. This transparency makes it easier to review AI behavior, handle complaints, and ensure accountability across teams.
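Accountability is easier to enforce when every automated decision leaves a record of which model made it, which version was running, and who owns it. The sketch below assumes a hypothetical record layout written as JSON Lines (one JSON object per line); real systems would usually send these records to a tamper-resistant log store rather than a local file.

```python
import json
import os
import tempfile
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class DecisionRecord:
    # Field names are a hypothetical layout, not a standard schema.
    model_name: str
    model_version: str
    owner: str            # accountable team or person
    input_summary: dict   # summarized inputs; avoid storing raw personal data
    decision: str
    reason_codes: list
    timestamp: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())


def append_record(path, record):
    """Append one decision as a single JSON line, which is easy to audit later."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(asdict(record)) + "\n")


log_path = os.path.join(tempfile.mkdtemp(), "decisions.jsonl")
append_record(log_path, DecisionRecord(
    model_name="loan-approval",
    model_version="1.4.0",
    owner="credit-risk-team",
    input_summary={"income_band": "B", "credit_score_band": "650-700"},
    decision="refer_to_human",
    reason_codes=["LOW_CONFIDENCE"],
))
```

When a customer complains, reviewers can look up the exact model version and owning team behind the decision instead of reconstructing it after the fact.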

AI application development services should focus on building solutions that are ethical, transparent, and aligned with business goals. Strong governance ensures data privacy, fairness, and human oversight throughout the AI development lifecycle. This approach builds trust, reduces risk, and enables responsible, scalable AI adoption.

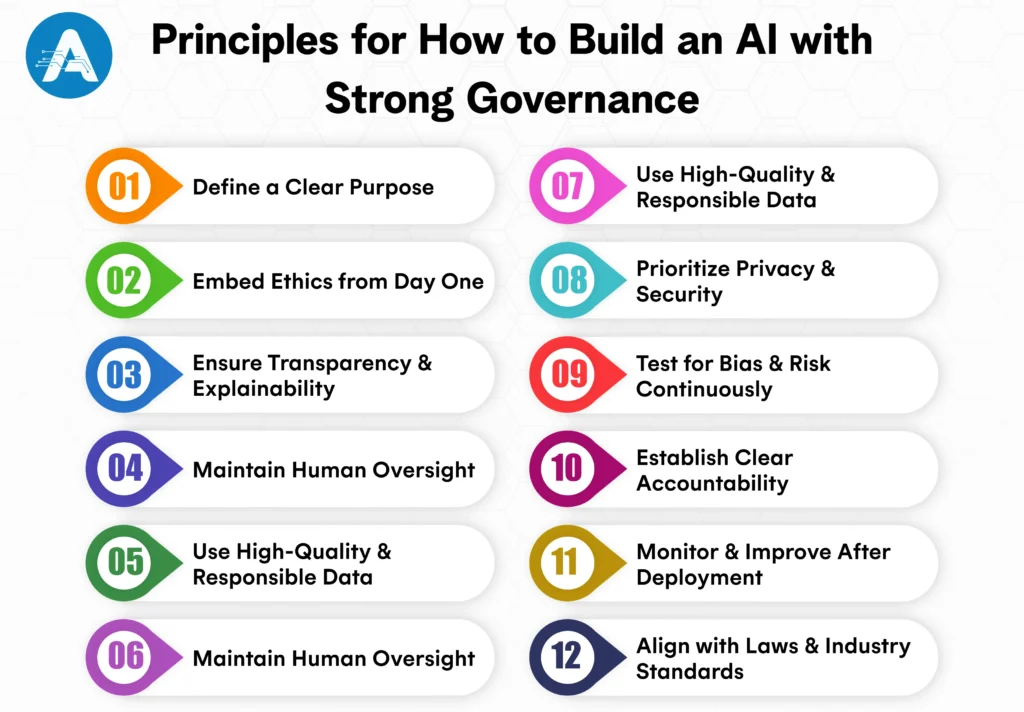

When building an AI system, it is important to start with a clearly defined purpose. The AI should address a specific business or user problem and support meaningful outcomes. Its goals must align with organizational values and ethical standards. A well-defined purpose helps guide development decisions and prevents misuse or unnecessary complexity.

Ethics should be integrated into AI development from the very beginning, not added later. Fairness, inclusivity, and responsible decision-making must guide data selection and model design. Addressing ethical risks early helps reduce bias and builds trust. This approach ensures the AI system behaves responsibly throughout its lifecycle.

AI systems should be designed so their decisions can be understood by users and stakeholders. Transparency helps explain how outcomes are generated and why certain decisions are made. This is especially important in high-impact areas such as healthcare or finance. Explainable AI builds confidence and strengthens governance.

AI should assist human decision-making rather than replace accountability. Clear processes must be in place for human review and intervention when needed. Critical decisions should always involve human judgment. This ensures ethical control and prevents over-reliance on automated systems.
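A common human-in-the-loop pattern is to automate only confident predictions and send everything else, plus all high-impact cases, to a person. The sketch below assumes a model score between 0 and 1 and hypothetical thresholds; each organization would set its own values and decide which decisions count as high-impact.

```python
def route_decision(score, approve_at=0.85, decline_at=0.15, high_impact=False):
    """Route a model score to an automated action or to a human reviewer.

    Thresholds are hypothetical policy values.
    """
    if high_impact:
        return "human_review"  # critical decisions always involve a person
    if score >= approve_at:
        return "auto_approve"
    if score <= decline_at:
        return "auto_decline"
    return "human_review"      # uncertain band: the model is not confident enough


for score, impact in [(0.92, False), (0.50, False), (0.05, False), (0.95, True)]:
    print(score, impact, route_decision(score, high_impact=impact))
```

Widening the uncertain band sends more cases to reviewers; narrowing it automates more. The right setting is a governance decision, not only a technical one.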

The effectiveness of AI depends heavily on the quality of data used. Data should be accurate, unbiased, and collected through legal and ethical means. Poor-quality or biased data can lead to unreliable and unfair outcomes. Responsible data practices are essential for strong AI governance.
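Data quality rules can be checked automatically before data ever reaches training. The sketch below assumes a hypothetical schema that maps each column to an allowed range and reports missing or out-of-range values; dedicated validation libraries offer far richer checks, but the principle is the same.

```python
def validate_rows(rows, schema):
    """Return a list of human-readable data-quality problems.

    schema maps column -> (min, max); the columns and ranges are hypothetical.
    """
    problems = []
    for i, row in enumerate(rows):
        for col, (lo, hi) in schema.items():
            value = row.get(col)
            if value is None:
                problems.append(f"row {i}: missing '{col}'")
            elif not (lo <= value <= hi):
                problems.append(f"row {i}: '{col}'={value} outside [{lo}, {hi}]")
    return problems


schema = {"age": (18, 100), "annual_income": (0, 10_000_000)}
rows = [
    {"age": 34, "annual_income": 52_000},
    {"age": 17, "annual_income": 40_000},
    {"annual_income": -5},
]
problems = validate_rows(rows, schema)
for p in problems:
    print(p)
```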

Protecting user data must be a top priority when building AI systems. Privacy-by-design principles should be embedded throughout the development process. Strong security measures help prevent data breaches and misuse. Compliance with data protection regulations further strengthens trust and governance.
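One privacy-by-design technique is pseudonymization: replacing direct identifiers with keyed tokens before data is used for analytics or training. The sketch below uses Python's standard `hmac` module with SHA-256; reading the key from a `PSEUDONYM_KEY` environment variable is an assumption for illustration (a secrets manager is more typical). Note that pseudonymized data can still count as personal data under laws such as the GDPR.

```python
import hashlib
import hmac
import os

# Assumption: key supplied via an environment variable; the fallback is for local demos only.
SECRET_KEY = os.environ.get("PSEUDONYM_KEY", "dev-only-key").encode()


def pseudonymize(identifier: str) -> str:
    """Replace a direct identifier with a keyed, non-reversible token."""
    return hmac.new(SECRET_KEY, identifier.encode(), hashlib.sha256).hexdigest()


record = {"email": "jane@example.com", "age_band": "30-39", "clicks": 12}
safe_record = {**record, "email": pseudonymize(record["email"])}
print(safe_record)
```

Because the same input always yields the same token, records can still be joined across datasets without exposing the original email address.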

AI governance does not end after deployment. Regular testing is required to identify bias, performance issues, and emerging risks. As data and usage patterns change, models must be reassessed and improved. Continuous evaluation ensures the AI remains fair, reliable, and safe.
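Regular testing is most effective when it acts as a gate: a retrained model is released only if it meets agreed thresholds. The sketch below assumes hypothetical metric names, including a disparate impact ratio (lowest group selection rate divided by highest), and hypothetical policy values for minimum accuracy, minimum fairness ratio, and allowed regression against the current model.

```python
def release_gate(candidate, current, min_accuracy=0.80, min_di_ratio=0.80, max_drop=0.02):
    """Return (passed, reasons) for a candidate model's evaluation metrics.

    Metric names and thresholds are hypothetical policy values.
    """
    reasons = []
    if candidate["accuracy"] < min_accuracy:
        reasons.append("accuracy below minimum")
    if candidate["accuracy"] < current["accuracy"] - max_drop:
        reasons.append("accuracy regressed versus current model")
    if candidate["disparate_impact_ratio"] < min_di_ratio:
        reasons.append("fairness ratio below threshold")
    return (not reasons, reasons)


current = {"accuracy": 0.86, "disparate_impact_ratio": 0.91}
candidate = {"accuracy": 0.83, "disparate_impact_ratio": 0.78}
passed, reasons = release_gate(candidate, current)
print("release" if passed else f"blocked: {reasons}")
```

Recording the gate's result for every release also gives auditors evidence that evaluation happened, not just a promise that it would.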

Clear ownership of AI systems is essential for effective governance. Organizations must define who is responsible for AI decisions, performance, and failures. Accountability ensures quicker issue resolution and better risk management. It also reinforces trust among users and stakeholders.

Once deployed, AI systems must be continuously monitored to ensure consistent performance. Changes in data or environment can impact accuracy and fairness. Regular updates and improvements help maintain alignment with governance standards. Ongoing monitoring supports long-term success.

AI systems must comply with applicable laws, regulations, and industry guidelines. Legal alignment reduces operational and reputational risks. Industry standards also promote ethical and responsible AI use. Compliance helps organizations build trust and scale AI solutions confidently.

The future of AI governance will focus on balancing innovation with responsibility as AI becomes central to business and society. Governments and organizations will introduce clearer regulations, global standards, and ethical frameworks to support safe and responsible AI development without limiting growth.

AI governance will increasingly prioritize transparency, explainability, and accountability, especially when building high-risk AI systems. Organizations will be expected to clearly document how AI models are built, trained, and deployed. Regular audits and impact assessments will become a standard part of AI application development services.

Another major shift will be toward continuous and automated AI governance. Instead of one-time compliance checks, businesses will use monitoring tools to track bias, security, and performance in real time. This ensures AI systems remain compliant, fair, and reliable as they evolve.

Overall, the future of AI governance will be more human-centric and collaborative. Strong human oversight, cross-functional teams, and cooperation between regulators, developers, and businesses will be essential. This approach will build trust, enable responsible AI adoption, and support long-term, sustainable AI growth.

Partnering with Artoon ensures a structured and reliable approach when you build an artificial intelligence system or work on AI projects. Artoon combines deep expertise in AI development with strong governance frameworks, helping organizations reduce risks, ensure ethical practices, and maintain transparency throughout the AI lifecycle.

Moreover, with Artoon, businesses can confidently build an AI, knowing that every model, app, or system follows best practices for compliance, accountability, and performance. From designing AI solutions to monitoring and improving them, Artoon provides the guidance and tools needed for safe, trustworthy, and scalable AI.

Furthermore, choosing Artoon for AI governance ensures your AI initiatives are not only innovative but also aligned with business goals, ethical standards, and regulatory requirements, turning AI projects into long-term value for your organization.

AI governance is no longer optional; it is a critical part of building responsible and successful AI systems. A strong governance framework helps reduce risks, improve transparency, and ensure AI solutions remain ethical, reliable, and aligned with business goals. It also builds trust among users, customers, and stakeholders while supporting long-term growth.

By embedding governance into every stage of AI development, organizations can innovate with confidence. The right approach to AI governance ensures that artificial intelligence is not only powerful but also safe, compliant, and built for long-term success.


To build an artificial intelligence system responsibly, organizations must combine strong technical development with AI governance. This includes using quality data, reducing bias, ensuring transparency, and continuously monitoring AI performance to align with ethical and business goals.

AI governance supports building AI models by setting standards for fairness, accuracy, and explainability. It also ensures models are regularly reviewed and updated to maintain performance and trust.

AI governance is important when you build an AI because it helps control risks such as biased decisions, data misuse, and system failures. It ensures AI systems are reliable, compliant, and trusted by users and stakeholders.

When learning how to build an AI app, governance should be integrated from the start. This includes setting clear objectives, protecting user data, testing for bias, and monitoring app performance after deployment.

Yes, AI governance is essential for businesses involved in AI building. It ensures AI initiatives align with business goals, meet regulations, and deliver consistent value without unnecessary risk.

Monitoring is critical in AI building because models can degrade over time due to new data or changing conditions. Governance frameworks provide processes to track accuracy, fairness, and reliability continuously.

Yes, governance makes AI building faster and safer by providing structured processes, early risk detection, and guidelines for responsible development. This allows teams to innovate confidently while avoiding costly mistakes.

Knowing how to build an AI model responsibly requires integrating governance at every stage. Governance ensures that data, algorithms, and outputs meet ethical, legal, and operational standards. It reduces bias, improves explainability, and maintains consistent performance, allowing AI models to deliver reliable and trustworthy results.
