Pgvector vs Pinecone: Choosing the Right Vector Database

Choosing between Pgvector and Pinecone is one of the most significant infrastructure decisions an AI engineer will make. Pinecone is a precision-engineered engine for high-dimensional vector search, while Pgvector is an organic extension of PostgreSQL, one of the world’s most trusted databases.

Curiously, the word Pinecone isn’t just a tech brand; it’s also a symbol of nature’s efficient data storage. Just as a pine cone protects a tree’s seeds, and the genetic data inside them, through the harshest seasons, your choice of vector database determines how resilient and scalable your AI applications will be.

For an AI development company seeking to build future-proof solutions, understanding these structural foundations is paramount.

In this guide, we’ll explore the technical landscape of Pgvector and Pinecone and, along the way, show why pine cones, from their sacred geometry to their protective scales, offer a fitting metaphor for modern data architecture.

Before choosing between Pgvector and Pinecone, it is essential to understand the architectural philosophy behind each. Let’s start with the integrated approach, where vector search grows from an existing relational foundation.

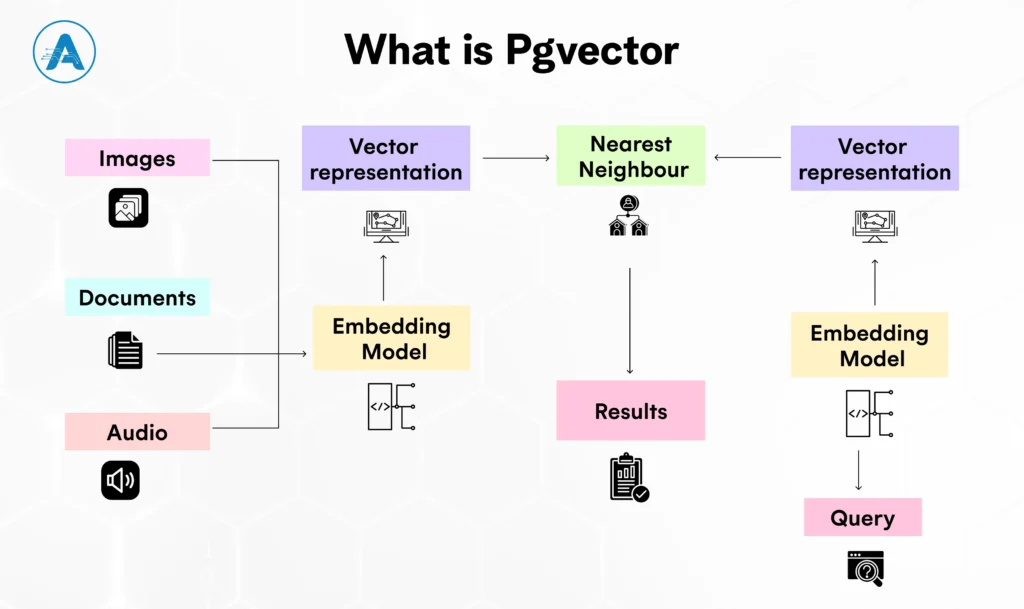

When comparing Pgvector and Pinecone, Pgvector represents the self-managed, database-centric approach to vector search. Pgvector is an open-source vector extension for PostgreSQL that lets you store and query embeddings directly within a PostgreSQL database, next to your existing relational data.
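To make "querying embeddings" concrete: pgvector adds distance operators to SQL, including `<->` (Euclidean/L2 distance), `<#>` (negative inner product) and `<=>` (cosine distance), so a nearest-neighbour search is an ordinary `ORDER BY ... LIMIT` query. The sketch below reproduces what those three operators compute in plain Python so you can check results by hand. It illustrates the math only, not pgvector's C implementation, and the helper names and the `items` data are hypothetical.

```python
import math
from typing import Sequence


def _check_dims(a: Sequence[float], b: Sequence[float]) -> None:
    # pgvector rejects comparisons between vectors of different dimensions.
    if len(a) != len(b):
        raise ValueError(f"different vector dimensions {len(a)} and {len(b)}")


def l2_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """What `a <-> b` computes: Euclidean (L2) distance."""
    _check_dims(a, b)
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def negative_inner_product(a: Sequence[float], b: Sequence[float]) -> float:
    """What `a <#> b` computes: the inner product, negated so that
    smaller values mean "more similar" in an ascending ORDER BY."""
    _check_dims(a, b)
    return -sum(x * y for x, y in zip(a, b))


def cosine_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """What `a <=> b` computes: 1 - cosine similarity."""
    _check_dims(a, b)
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


# Plain-Python stand-in for a hypothetical query:
#   SELECT id FROM items ORDER BY embedding <-> '[3,1,2]' LIMIT 2;
items = {1: [1.0, 2.0, 3.0], 2: [4.0, 5.0, 6.0], 3: [3.0, 1.0, 2.5]}
query = [3.0, 1.0, 2.0]
nearest = sorted(items, key=lambda item_id: l2_distance(items[item_id], query))[:2]
print(nearest)  # → [3, 1]
```

Choosing the operator matters: an index built for one distance (for example with `vector_cosine_ops`) is only used by queries that order by the matching operator.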

While Pgvector extends a familiar database, Pinecone takes a fundamentally different path, one built entirely around scale.

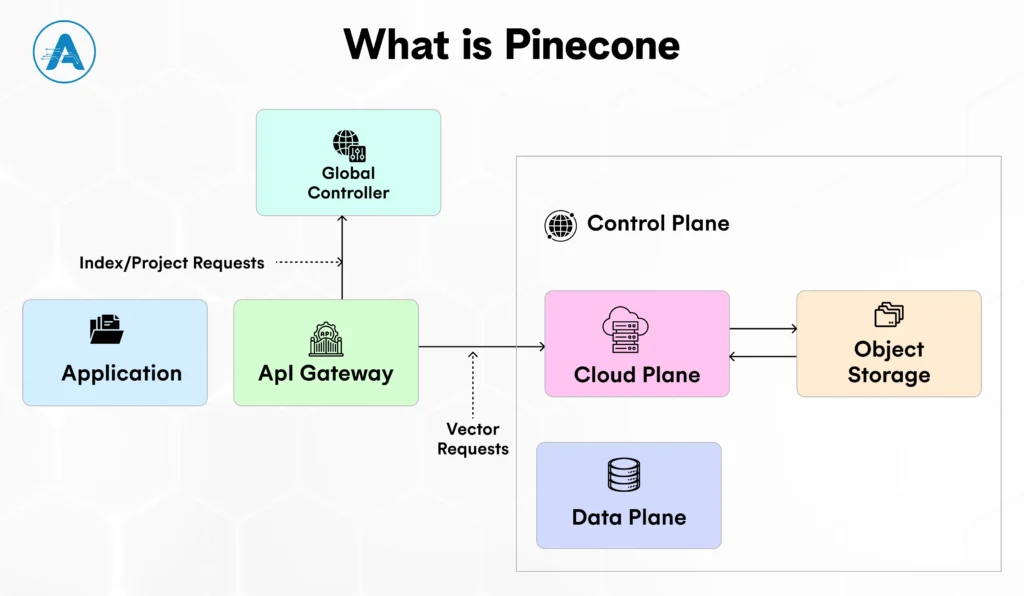

In the Pgvector vs Pinecone discussion, Pinecone represents the managed, purpose-built side of vector databases. Pinecone is a fully managed, dedicated vector database designed to store, index, and search high-dimensional embeddings at large scale for AI and machine learning applications.

With both approaches outlined, the real comparison begins when we put them side by side.

When evaluating Pgvector vs Pinecone, the real differences show up in how each tool is built, scaled, and operated. Below is a clear, feature-by-feature breakdown to help you choose based on real-world needs, not hype.

| Feature | Pgvector | Pinecone |
| --- | --- | --- |
| Core Architecture | PostgreSQL vector extension | Purpose-built vector database |
| Deployment | Runs inside PostgreSQL (self-hosted or managed Postgres) | Fully managed cloud service |
| Setup | Requires DB setup and tuning | Minimal setup, ready to use |
| Scalability | Limited horizontal scaling | Designed for massive scale |
| Performance | Best for small–medium datasets | Optimised for large workloads |
| Query Method | SQL + vector similarity | API-based vector queries |
| Index Management | Manual index tuning | Automatic index optimisation |
| Data Control | Full data ownership | Managed with enterprise security |
| Cost Model | Lower infra cost, higher ops | Usage-based, lower ops effort |
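The "Query Method" row is easiest to see side by side. The sketch below expresses one filtered top-k request both ways: as a parameterised SQL statement (assuming psycopg-style `%s` placeholders) against a pgvector column, and as keyword arguments for a Pinecone index `query` call, which accepts `vector`, `top_k`, `filter` and `include_metadata`. The `documents` table, its `id`, `title`, `embedding` and `tenant_id` columns, and the `tenant_id` metadata field are hypothetical, and nothing here connects to a database or to Pinecone.

```python
from typing import Any


def pgvector_query(embedding: list[float], tenant_id: str, k: int) -> tuple[str, tuple[Any, ...]]:
    """SQL for a hypothetical `documents` table with a pgvector `embedding` column.

    Relational filtering (WHERE) and similarity ranking (ORDER BY ... <=> ...)
    live in one statement; `<=>` is pgvector's cosine-distance operator.
    """
    vector_literal = "[" + ",".join(str(x) for x in embedding) + "]"  # pgvector text format
    sql = (
        "SELECT id, title FROM documents "
        "WHERE tenant_id = %s "
        "ORDER BY embedding <=> %s::vector "
        "LIMIT %s"
    )
    return sql, (tenant_id, vector_literal, k)


def pinecone_query_kwargs(embedding: list[float], tenant_id: str, k: int) -> dict[str, Any]:
    """Keyword arguments for a Pinecone `index.query(**kwargs)` call.

    The tenant restriction becomes a metadata filter document, assuming each
    vector was upserted with a hypothetical `tenant_id` metadata field.
    """
    return {
        "vector": embedding,
        "top_k": k,
        "filter": {"tenant_id": {"$eq": tenant_id}},
        "include_metadata": True,
    }


embedding = [0.1, 0.2, 0.3]
sql, params = pgvector_query(embedding, "acme", 5)
print(sql)
print(params)  # → ('acme', '[0.1,0.2,0.3]', 5)
print(pinecone_query_kwargs(embedding, "acme", 5))
```

In the SQL version the filter can reference anything PostgreSQL can evaluate; in the API version it can only reference metadata stored on each vector.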

Architecture and performance matter, but cost is where many real-world decisions are ultimately made.

When choosing between Pgvector and Pinecone, the most significant factor for long-term sustainability isn’t just query speed; it’s the monthly bill. In the world of AI data, free seeds can grow into expensive forests, and managed luxury can quickly consume your development budget.

For most developers, Pgvector is the clear winner for cost-efficiency. Because it is an open-source vector extension, the software itself costs nothing; you pay only for the PostgreSQL infrastructure it runs on and the engineering time to operate it.

Pinecone operates on a Database-as-a-Service (DBaaS) model, which means you pay usage-based fees in exchange for zero-ops convenience.

Understanding cost is only half the equation; many teams still make avoidable mistakes during selection.

Selecting a vector database is often where the hype meets the bill. Many teams stumble not because of technical incompetence, but because of a misalignment between their current needs and the tool’s intended use case.

Here are some common pitfalls to avoid:

Choosing a high-scale solution such as Pinecone for an MVP with only a small number of vectors. When your data fits into the RAM of a standard server, a simple vector extension such as Pgvector is normally sufficient.

Choosing a specialised database and discovering afterwards that your vectors are now separated from your metadata. This forces you to write complex code to keep your primary database and your vector store in sync.

Underestimating the effort needed to run the system. With Pgvector, you handle backups and index tuning yourself; with Pinecone, you hand that work to the provider in exchange for a monthly bill and a dependency on its API.

Judging on raw search speed alone and forgetting that real-world RAG (Retrieval-Augmented Generation) searches are usually heavily filtered. Pgvector can combine similarity search with arbitrary SQL filters, whereas specialised tools may offer less expressive filtering.

Assuming that because a database is marketed as AI-native, it is automatically better. As the PostgreSQL vector ecosystem has shown, adding vector capabilities to a battle-tested database often yields more reliable results than a brand-new specialised engine.

Not modelling how costs will scale. A cheap starter pod on Pinecone can easily grow into a large pine cone of a bill once you add replicas to handle high traffic, or more capacity for more or larger vectors.
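A quick model makes the starter-pod-to-large-bill effect visible. The sketch assumes pod-style pricing, where each pod is billed per hour and every replica adds another full set of pods. The capacity per pod and the hourly price are hypothetical inputs, not real Pinecone figures, and Pinecone's serverless indexes are billed on usage instead, so check current pricing before relying on any number.

```python
import math

HOURS_PER_MONTH = 730  # common approximation: 24 * 365 / 12


def monthly_pod_cost(num_vectors: int, vectors_per_pod: int, replicas: int,
                     price_per_pod_hour: float) -> float:
    """Rough monthly bill for a pod-style vector index.

    Assumptions (hypothetical; take real figures from your provider):
    - shards = ceil(num_vectors / vectors_per_pod); higher-dimensional
      vectors lower vectors_per_pod, so they need more shards
    - each replica is a full copy, so total pods = shards * replicas
    - every pod is billed at the same hourly rate around the clock
    """
    shards = math.ceil(num_vectors / vectors_per_pod)
    total_pods = shards * replicas
    return total_pods * price_per_pod_hour * HOURS_PER_MONTH


# Placeholder numbers for illustration only, not real Pinecone prices.
mvp = monthly_pod_cost(500_000, vectors_per_pod=1_000_000, replicas=1, price_per_pod_hour=0.5)
grown = monthly_pod_cost(3_000_000, vectors_per_pod=1_000_000, replicas=3, price_per_pod_hour=0.5)
print(mvp, grown, grown / mvp)  # → 365.0 3285.0 9.0
```

In this toy scenario, six times more data plus three replicas multiplies the bill by nine, because shards and replicas multiply rather than add.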

Avoiding these pitfalls becomes easier when you evaluate your needs through a clear decision framework.

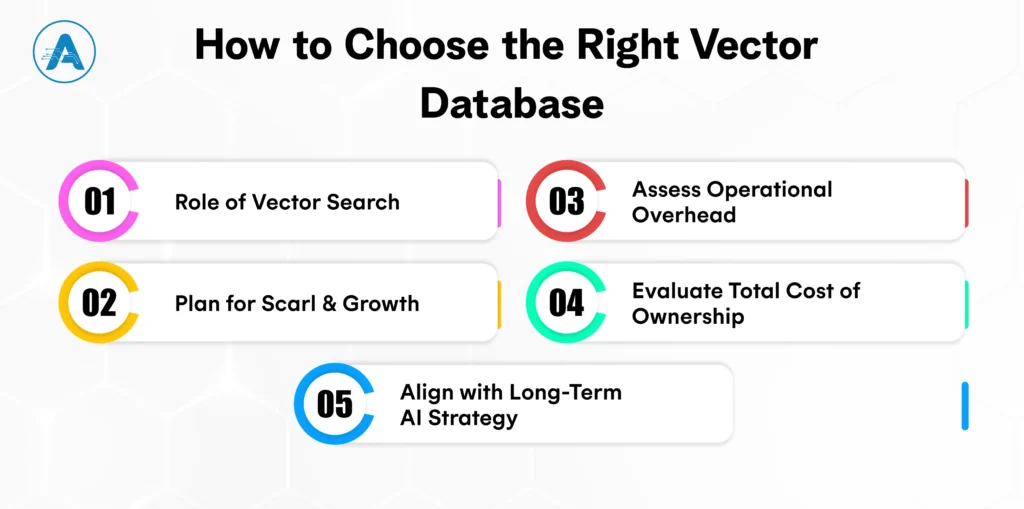

Choosing the right vector database can define whether your AI system scales smoothly or becomes a bottleneck later. Teams comparing Pgvector vs Pinecone are usually at a critical decision point: moving from experimentation to production. Making the right choice early saves months of rework and unnecessary infrastructure cost.

This is often the stage where structured AI application development services help teams assess scale, cost, and long-term maintainability before locking in a vector database.

Start by clarifying how central vector search is to your product. Pgvector is a practical solution if embeddings fit into your existing relational workflows. If a core feature, such as semantic search or RAG, is powered by vector search, Pinecone is usually the lower-risk choice in the long term.

Many AI systems perform well in the initial stage and then struggle as data grows. Pgvector is best suited to controlled, predictable workloads, whereas Pinecone is built around continuous scaling with consistent performance.
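A back-of-the-envelope storage estimate shows how quickly growth can push a Pgvector deployment past what a single server's RAM holds comfortably. Per the pgvector README, each `vector` value takes 4 bytes per dimension plus 8 bytes. The sketch below uses that figure; the 50% "headroom" rule and the 16 GiB server are hypothetical assumptions, and the estimate ignores row overhead, other columns and index size (an HNSW index adds more on top).

```python
GIB = 1024**3


def pgvector_storage_bytes(num_vectors: int, dimensions: int) -> int:
    """Raw size of pgvector `vector` values: 4 bytes per single-precision
    element plus 8 bytes per vector. Excludes row overhead, other columns,
    and index size."""
    return num_vectors * (4 * dimensions + 8)


def fits_in_ram(num_vectors: int, dimensions: int, ram_gib: float, headroom: float = 0.5) -> bool:
    """Hypothetical rule of thumb: keep raw vector data under `headroom`
    of RAM, leaving the rest for indexes, caches, and the OS."""
    return pgvector_storage_bytes(num_vectors, dimensions) <= ram_gib * GIB * headroom


size = pgvector_storage_bytes(1_000_000, 1536)
print(f"{size / GIB:.2f} GiB")                    # → 5.73 GiB
print(fits_in_ram(1_000_000, 1536, ram_gib=16))   # → True
print(fits_in_ram(10_000_000, 1536, ram_gib=16))  # → False
```

With 1,536-dimensional embeddings, one million vectors is comfortable on a 16 GiB machine under this rule, while ten million is not.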

Self-managed databases require ongoing tuning, monitoring, and scaling decisions. Managed vector databases lessen this load but introduce a dependency on an external service.
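Index tuning is a good example of that ongoing work. An IVFFlat index in Pgvector fixes its number of clusters (`lists`) when it is built, so as the table grows you recompute parameters and rebuild. The helper below encodes the starting points suggested in the pgvector README (`lists` = rows / 1000 up to 1M rows, sqrt(rows) beyond that; `probes` = sqrt(lists)) and emits matching SQL for a hypothetical `documents.embedding` column. HNSW indexes have their own knobs (`m`, `ef_construction`, `hnsw.ef_search`) and are not covered here.

```python
import math


def ivfflat_starting_params(rows: int) -> dict[str, int]:
    """Starting points from the pgvector README for IVFFlat.
    Tune from here against your own recall and latency targets."""
    lists = max(1, rows // 1000) if rows <= 1_000_000 else int(math.sqrt(rows))
    probes = max(1, round(math.sqrt(lists)))
    return {"lists": lists, "probes": probes}


def ivfflat_statements(table: str, column: str, rows: int) -> list[str]:
    """SQL to (re)build the index. `table` and `column` are trusted,
    hypothetical identifiers; never interpolate user input like this."""
    params = ivfflat_starting_params(rows)
    return [
        f"CREATE INDEX ON {table} USING ivfflat ({column} vector_cosine_ops) "
        f"WITH (lists = {params['lists']});",
        f"SET ivfflat.probes = {params['probes']};",
    ]


print(ivfflat_starting_params(500_000))    # → {'lists': 500, 'probes': 22}
print(ivfflat_starting_params(4_000_000))  # → {'lists': 2000, 'probes': 45}
for stmt in ivfflat_statements("documents", "embedding", 500_000):
    print(stmt)
```

The README also recommends creating an IVFFlat index only after the table contains data, since the clusters are computed from the rows present at build time.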

Infrastructure cost does not tell the whole story. Engineering time, maintenance effort, and reliability all affect long-term ROI.
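One way to put engineering time on the same scale as the infrastructure bill is to price ops hours at a loaded hourly rate and compute a break-even point. Every figure in the sketch below is hypothetical; the point is the shape of the calculation, not the numbers.

```python
from dataclasses import dataclass


@dataclass
class MonthlyCost:
    infrastructure: float     # server or service bill per month
    engineering_hours: float  # ops work: upgrades, backups, tuning, on-call
    hourly_rate: float        # loaded cost of one engineer-hour

    def total(self) -> float:
        return self.infrastructure + self.engineering_hours * self.hourly_rate


def break_even_hours(self_managed_infra: float, managed: MonthlyCost, hourly_rate: float) -> float:
    """Monthly ops hours at which a self-managed setup costs as much as the managed one."""
    return (managed.total() - self_managed_infra) / hourly_rate


# Hypothetical figures for illustration only.
self_managed = MonthlyCost(infrastructure=300.0, engineering_hours=20.0, hourly_rate=100.0)
managed = MonthlyCost(infrastructure=1200.0, engineering_hours=2.0, hourly_rate=100.0)
print(self_managed.total(), managed.total())              # → 2300.0 1400.0
print(break_even_hours(300.0, managed, hourly_rate=100.0))  # → 11.0
```

Under these assumptions, the cheaper infrastructure only wins if the self-managed setup needs fewer than 11 engineer-hours a month.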

Your vector database should support your future AI roadmap, not restrict it. Capabilities such as RAG, multi-tenant search, and real-time inference each place different demands on the architecture.

Once the trade-offs are clear, the final step is aligning your choice with long-term product and AI strategy.

The decision between Pgvector and Pinecone comes down to how much scale, control, and operational simplicity your AI system actually requires. Pgvector is a useful option when teams want AI functionality close to their existing PostgreSQL stack and prioritise flexibility and cost.

Pinecone is built around a different reality, one in which vector search is core infrastructure. As a purpose-built system, Pinecone reflects the pine cone in nature: organised, sturdy, and able to grow large without human intervention.

There’s no universal winner in Pgvector vs Pinecone, only the database that best fits your current workload and long-term AI strategy.


The core difference in Pgvector vs Pinecone is architecture. Pgvector is a vector extension inside PostgreSQL, while Pinecone is a purpose-built, fully managed vector database designed for large-scale AI workloads.

Pgvector works well for RAG when vector search is combined with relational filters in a PostgreSQL setup. It’s especially effective for small to medium datasets and internal or early-stage production systems.
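Filtered RAG queries need a little care in Pgvector. According to the pgvector documentation, with approximate indexes the WHERE clause is applied after the index scan, so with HNSW's default `hnsw.ef_search` of 40, a condition matching 10% of rows returns only about 4 rows on average; raising `hnsw.ef_search`, or using the iterative index scans added in pgvector 0.8.0, helps. The toy simulation below uses one-dimensional "embeddings" and an exact candidate pool standing in for the index scan, purely to show the effect; it is not how HNSW is implemented.

```python
from typing import Any, Callable

Row = dict[str, Any]


def post_filtered_search(rows: list[Row], query: float, k: int, candidate_pool: int,
                         predicate: Callable[[Row], bool]) -> list[int]:
    """Approximate-index behaviour: fetch the `candidate_pool` nearest rows first
    (the role hnsw.ef_search plays), then apply the WHERE clause."""
    candidates = sorted(rows, key=lambda r: abs(r["embedding"] - query))[:candidate_pool]
    return [r["id"] for r in candidates if predicate(r)][:k]


def exact_filtered_search(rows: list[Row], query: float, k: int,
                          predicate: Callable[[Row], bool]) -> list[int]:
    """Exact-scan behaviour: apply the WHERE clause first, then rank."""
    matching = [r for r in rows if predicate(r)]
    return [r["id"] for r in sorted(matching, key=lambda r: abs(r["embedding"] - query))][:k]


# 1,000 rows with one-dimensional "embeddings"; 10% belong to tenant "a".
rows = [{"id": i, "embedding": float(i), "tenant": "a" if i % 10 == 0 else "b"} for i in range(1000)]


def is_tenant_a(row: Row) -> bool:
    return row["tenant"] == "a"


print(post_filtered_search(rows, 0.0, k=10, candidate_pool=40, predicate=is_tenant_a))
# → [0, 10, 20, 30]  (4 rows instead of the 10 requested)
print(exact_filtered_search(rows, 0.0, k=10, predicate=is_tenant_a))
# → [0, 10, 20, 30, 40, 50, 60, 70, 80, 90]
```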

Choose Pinecone when vector search is a core feature, your dataset is expected to grow into millions or billions of vectors, or when you want minimal operational overhead with consistent low-latency performance.

Pgvector offers more control but requires ongoing database management. Pinecone is easier to maintain operationally since scaling, indexing, and availability are handled automatically.

Evaluate your current workload, expected growth, team expertise, and long-term AI strategy. The right choice is the one that fits both today’s needs and tomorrow’s scale.

Pinecone supports metadata filtering, but filters are expressed through its query API rather than SQL. Pgvector has an advantage when complex SQL-style filters, such as joins or subqueries, are required alongside vector similarity.
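Pinecone filters are JSON-style documents sent with the query, for example `{"genre": {"$eq": "drama"}}`, using MongoDB-like operators such as `$eq`, `$ne`, `$gt`, `$gte`, `$lt`, `$lte`, `$in`, `$nin`, `$and` and `$or`. To show what that model expresses, here is a small toy evaluator for a subset of those operators over in-memory metadata. It is a hypothetical reimplementation for illustration, not Pinecone's code, and edge cases such as missing fields or list-valued metadata may behave differently in the real service.

```python
from typing import Any, Callable

# Toy evaluator for a subset of Pinecone-style metadata filter operators.
# Missing fields are treated as None here; the real service may differ.
_OPS: dict[str, Callable[[Any, Any], bool]] = {
    "$eq": lambda value, operand: value == operand,
    "$ne": lambda value, operand: value != operand,
    "$gt": lambda value, operand: value is not None and value > operand,
    "$gte": lambda value, operand: value is not None and value >= operand,
    "$lt": lambda value, operand: value is not None and value < operand,
    "$lte": lambda value, operand: value is not None and value <= operand,
    "$in": lambda value, operand: value in operand,
    "$nin": lambda value, operand: value not in operand,
}


def matches(metadata: dict[str, Any], flt: dict[str, Any]) -> bool:
    """True if `metadata` satisfies every clause in `flt` (implicit AND)."""
    for key, condition in flt.items():
        if key == "$and":
            if not all(matches(metadata, sub) for sub in condition):
                return False
        elif key == "$or":
            if not any(matches(metadata, sub) for sub in condition):
                return False
        else:
            if not isinstance(condition, dict):
                condition = {"$eq": condition}  # shorthand: {"genre": "drama"}
            value = metadata.get(key)
            if not all(_OPS[op](value, operand) for op, operand in condition.items()):
                return False
    return True


docs = {
    "a": {"genre": "drama", "year": 2019},
    "b": {"genre": "comedy", "year": 2021},
    "c": {"genre": "drama", "year": 2022},
}
recent_drama = {"genre": {"$eq": "drama"}, "year": {"$gte": 2020}}
comedy_or_old = {"$or": [{"genre": "comedy"}, {"year": {"$lt": 2020}}]}
print([doc_id for doc_id, meta in docs.items() if matches(meta, recent_drama)])   # → ['c']
print([doc_id for doc_id, meta in docs.items() if matches(meta, comedy_or_old)])  # → ['a', 'b']
```

Every field a filter touches must already live on the vector's metadata. A condition that depends on another table, such as a permissions lookup, has to be copied into metadata first, which is where SQL filtering in Pgvector has the edge.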

Pgvector is generally more cost-efficient at smaller scales since it’s an open-source vector extension. Pinecone’s managed model can become more expensive at scale, but it saves significant operational and engineering effort.
